Our research revealed how attackers could leverage Hugging Face, the popular AI development and collaboration platform, to carry out an AI supply chain attack that could impact tens of thousands of developers and researchers. The attack, dubbed "AIJacking", is a variant of the infamous RepoJacking attack. It could lead to remote code execution and to the hijacking of heavily used Hugging Face models and datasets with over 100,000 downloads. The research techniques we employed, presented in this article, show how easy it is to exploit the vulnerability.

As we see growth in the adoption of generative AI, more and more attackers are targeting the AI supply chain to infiltrate organizations. OWASP covers these risks with the top 10 for LLM and the top 10 for ML security. AIJacking is an attack that relates to the following risks:

What Is Hugging Face?

Hugging Face is the most popular hub for open-source machine learning projects. It hosts models and datasets to be used by the AI community for research, development, and commercial usage. In AI, models are frameworks that learn patterns from data, and datasets are collections of information used to train and evaluate those models.

Hugging Face has become increasingly popular in recent years, due in large part to the rise of generative AI. One of the most popular LLMs on Hugging Face is GPT, the foundation model behind OpenAI's ChatGPT.

If an attacker were to compromise Hugging Face's software, they could gain access to these critical applications and cause widespread damage.

Model and dataset name changes are expected within Hugging Face, as organizations may evolve through collaborations, restructuring, or the need to adopt new naming conventions.

When such changes occur, a redirection is established to prevent disruptions for users relying on models or datasets whose names have been altered; however, if someone registers the old name, this redirection loses its validity.

AIJacking is an attack where a malicious actor registers a model or dataset name previously used by an organization but since renamed within Hugging Face.

By doing this, any project or algorithm that depends on the original model or dataset will inadvertently access the contents of the attacker-controlled model or dataset. This could lead to the unintentional retrieval of corrupted or malicious data, with potentially severe consequences for machine learning processes and applications.

Below, we'd like to share how we have found vulnerable projects and demonstrate an exploitation process.

Proof Of Concept

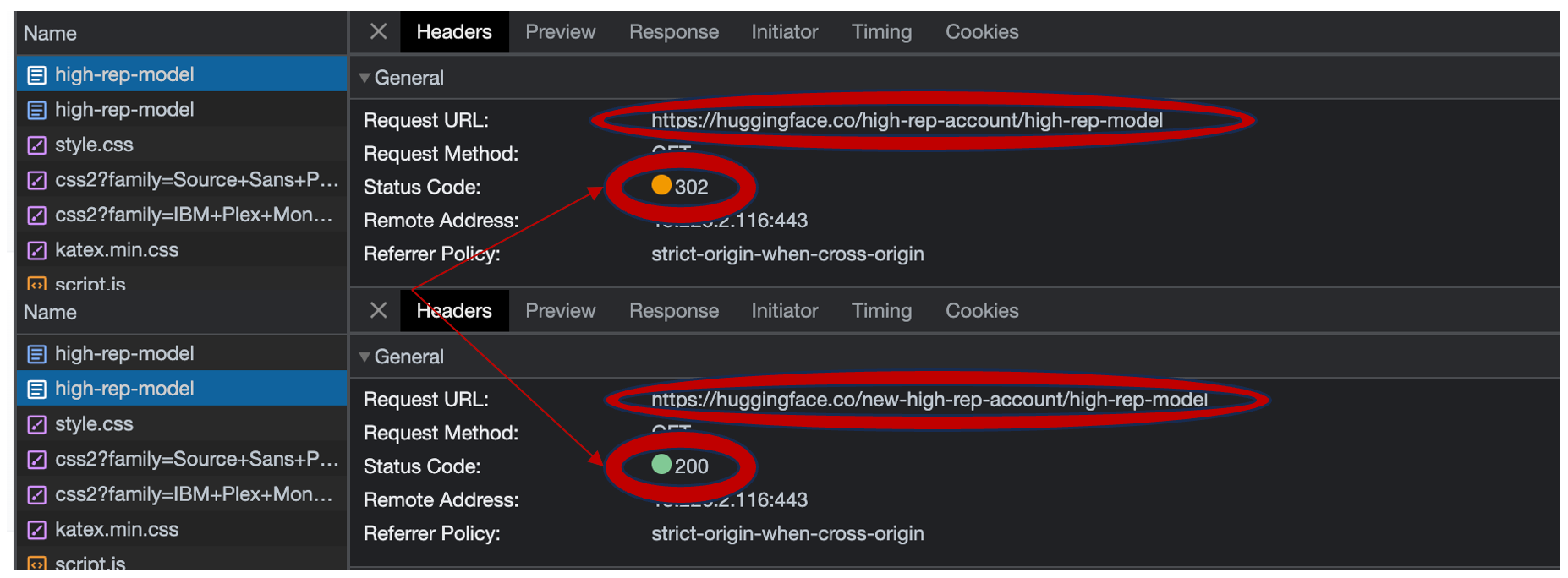

First, we needed to examine whether AIJacking was even possible on Hugging Face. As the image shows, we renamed our account "high-rep-account" to "new-high-rep-account" and watched how requests to the old name got redirected. We then created a new account and observed that the "high-rep-account" name was available for takeover.

How Did We Find Vulnerable Projects?

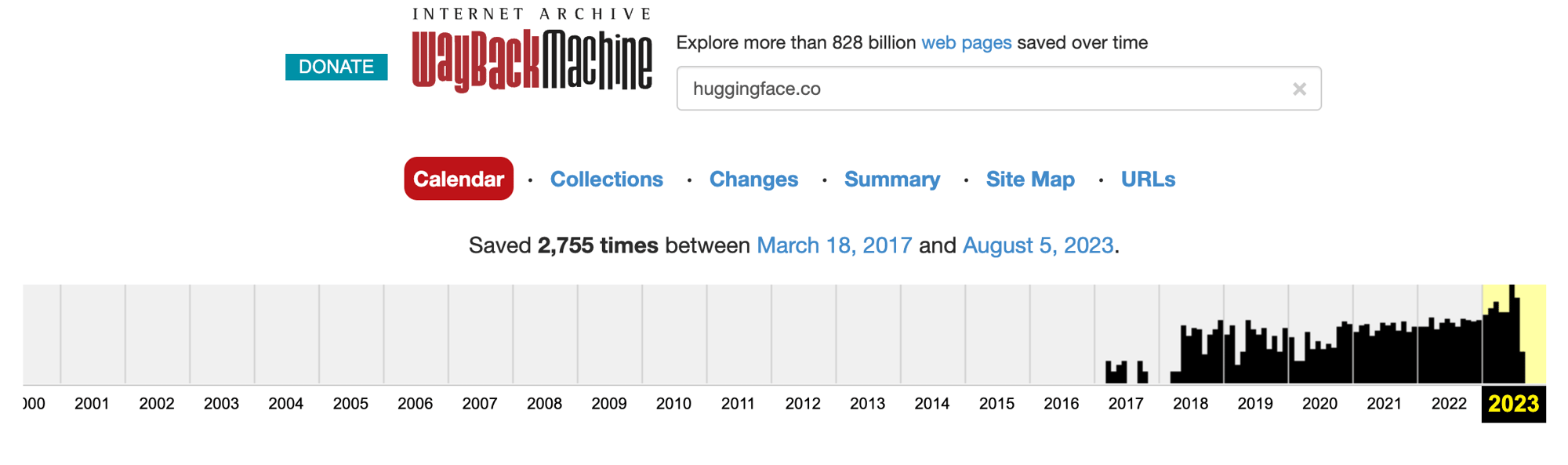

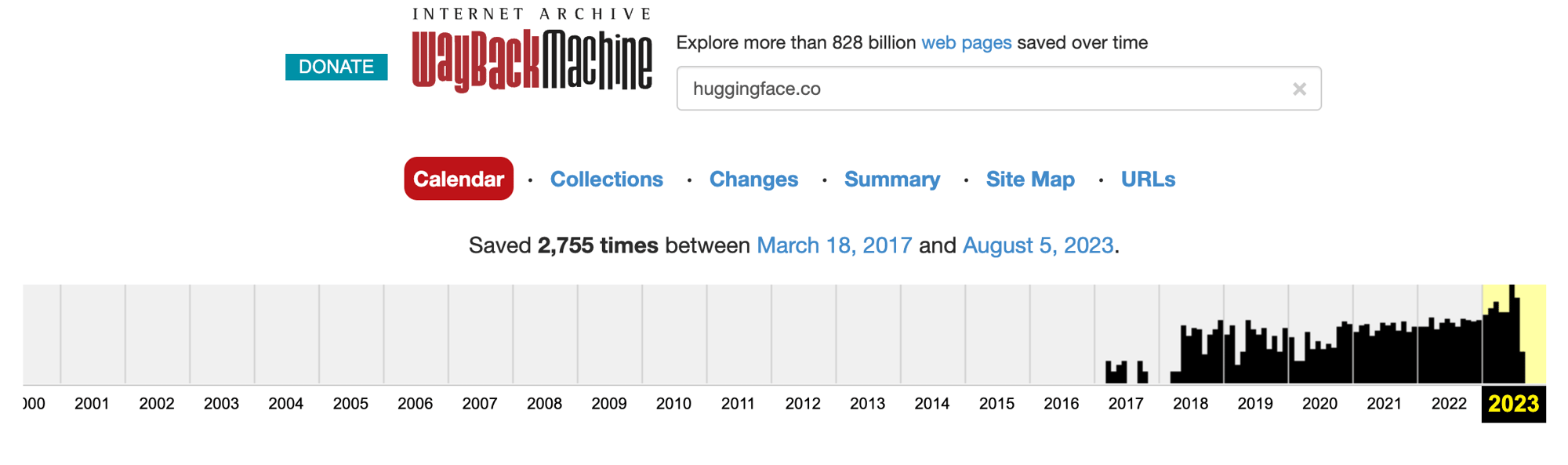

Unlike popular SCM (source code management) platforms such as GitHub and GitLab, Hugging Face does not have a data archive for inspecting the history and modifications of its projects. Instead, to determine which models and datasets were previously hosted on Hugging Face, we used the Wayback Machine, an internet archive that allows the public to browse older versions of websites such as Hugging Face.

Hugging Face's history

According to those archives, the models and datasets endpoints were first introduced in 2020, and their popularity has grown ever since.

Note: The following parts show how we performed the attack for the models endpoint, but the exploitation for the datasets endpoint is based on the same technique.

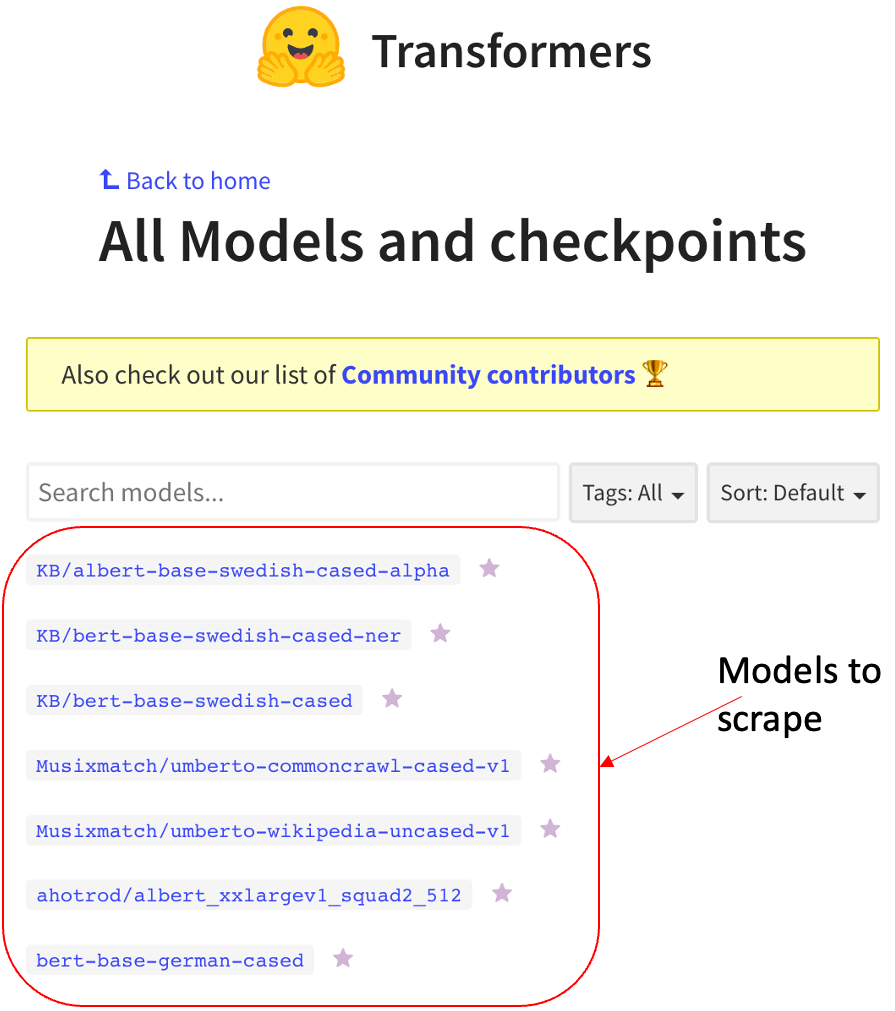

We sampled several dates in the archives and then scraped each sample to get the names of the hosted models. Since Hugging Face's layout has changed over the years, the scraping code had to be adapted to each version of the site. For example, the accompanying image shows how the models endpoint looked in June 2020.

Hugging Face in June 2020
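The sampling and scraping step could be sketched roughly as follows. This is a minimal illustration, not the scraper we used: it assumes the Wayback Machine's public CDX endpoint for listing snapshots, the `id_` URL modifier for fetching the archived page without rewritten links, and a link pattern of `href="/<namespace>/<model>"`, which will not hold for every version of the site. All function names and the exclusion list are hypothetical.

```python
import json
import re
import urllib.parse
import urllib.request

CDX_ENDPOINT = "https://web.archive.org/cdx/search/cdx"

# Assumption: first path segments that are site sections, not account names.
# Illustrative and incomplete.
NON_MODEL_PREFIXES = {"docs", "datasets", "models", "spaces", "blog", "api", "static"}

# Assumption: model links look like href="/<namespace>/<model>". Older or newer
# site versions may need a different pattern.
MODEL_LINK = re.compile(r'href="/([\w.-]+)/([\w.-]+)"')


def cdx_query_url(page="huggingface.co/models", date_from="2020", date_to="2023"):
    """Build a CDX query that returns roughly one successful snapshot per month."""
    params = {
        "url": page,
        "output": "json",
        "from": date_from,
        "to": date_to,
        "filter": "statuscode:200",
        "collapse": "timestamp:6",  # collapse on YYYYMM
    }
    return CDX_ENDPOINT + "?" + urllib.parse.urlencode(params)


def list_snapshot_timestamps(query_url, timeout=30):
    """Fetch the CDX listing; the first JSON row is a header naming the columns."""
    with urllib.request.urlopen(query_url, timeout=timeout) as resp:
        rows = json.loads(resp.read().decode("utf-8"))
    if not rows:
        return []
    column = rows[0].index("timestamp")
    return [row[column] for row in rows[1:]]


def snapshot_url(timestamp, page="huggingface.co/models"):
    """URL of the archived page as originally served (id_ skips link rewriting)."""
    return f"https://web.archive.org/web/{timestamp}id_/https://{page}"


def extract_model_ids(html):
    """Return the sorted, de-duplicated 'namespace/model' ids linked from a page."""
    ids = {
        f"{namespace}/{name}"
        for namespace, name in MODEL_LINK.findall(html)
        if namespace not in NON_MODEL_PREFIXES
    }
    return sorted(ids)
```

Only `list_snapshot_timestamps` touches the network. Keeping `extract_model_ids` separate makes it easy to swap in a different pattern for each site version.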

After we finished collecting a list of model names and organizations, we tried reaching every one of these models. Whenever an endpoint led to a redirect, we verified that the old account name had been changed, which makes it vulnerable to a hijacking attack. We found dozens of vulnerable accounts. However, not all pages were cached in the Wayback Machine, so we suspect the number of potentially vulnerable projects is much higher.
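A redirect check of this kind might look like the sketch below. It is an illustration under stated assumptions rather than our actual tooling: it assumes that a renamed model page answers with an HTTP redirect to the new location, and the helper names (`namespace_of`, `renamed_to`, `resolve`) are hypothetical. It only flags a rename. Confirming that the old name can actually be registered remains a separate step.

```python
import urllib.error
import urllib.parse
import urllib.request

HUB = "https://huggingface.co"


def namespace_of(url_or_id):
    """Return the first path segment, i.e. the account or organization name."""
    if "://" in url_or_id:
        path = urllib.parse.urlparse(url_or_id).path
    else:
        path = url_or_id
    return path.strip("/").split("/")[0]


def renamed_to(repo_id, final_url):
    """Return the new namespace if the request landed under a different one."""
    old = namespace_of(repo_id)
    new = namespace_of(final_url)
    if new and new.lower() != old.lower():
        return new
    return None


def resolve(repo_id, timeout=10):
    """Request a model page, following redirects, and return the final URL.

    Returns None on an HTTP error (for example, the page no longer exists).
    """
    request = urllib.request.Request(f"{HUB}/{repo_id}", method="HEAD")
    try:
        with urllib.request.urlopen(request, timeout=timeout) as response:
            return response.geturl()
    except urllib.error.HTTPError:
        return None


def find_renamed(repo_ids):
    """Map each redirected repo id to the namespace it now resolves to."""
    renamed = {}
    for repo_id in repo_ids:
        final_url = resolve(repo_id)
        if final_url is None:
            continue
        new_namespace = renamed_to(repo_id, final_url)
        if new_namespace:
            renamed[repo_id] = new_namespace
    return renamed
```

Separating `renamed_to` from the network call keeps the comparison logic testable without contacting the hub.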

Disclosure

We contacted Hugging Face, described the issue, and advised adding a namespace retirement mechanism, as GitHub did. They replied that they already have an internal mechanism for popular namespaces, but our research shows it is not effective.

For example, we were able to AIJack the namespace "ai-forever" by taking over its former name, "sberbank-ai". The models inside this namespace have over 250k downloads in total. The old name (sberbank-ai) was not retired by any automated mechanism. Only after we reached out did Hugging Face tell us that they had manually added it to the retirement blocklist because of our report.

We don't know whether they have changed the retirement mechanism since we first contacted them, as they did not respond further. However, 250k+ downloads is a large number, and this is just one example of a popular project that was left vulnerable and could put many users at risk.

How Do I Check If My Organization Is Impacted?

To check whether AIJacking impacts your organization, find out which of your repositories use Hugging Face models or datasets and identify which of those references lead to a redirect. Doing this manually might be difficult, and we invite you to contact us for help.
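As a starting point for the first half of that check, the sketch below collects namespaced model and dataset names from Python source files. It is a rough illustration with hypothetical function names. It assumes references appear as string literals passed positionally to `from_pretrained(...)` or `load_dataset(...)`, so names built at runtime, passed as keyword arguments, or stored in config files are missed.

```python
import re
from pathlib import Path

# Assumption: the reference is the first positional argument and a string
# literal of the form "namespace/name". Un-namespaced names are skipped
# because they have no account name that could be renamed.
REFERENCE = re.compile(
    r"""(?:from_pretrained|load_dataset)\(\s*["']([\w.-]+/[\w.-]+)["']"""
)


def find_hub_references(source):
    """Return the sorted, de-duplicated 'namespace/name' references in source text."""
    return sorted(set(REFERENCE.findall(source)))


def scan_tree(root):
    """Map each .py file under root to the hub references it contains."""
    hits = {}
    for path in Path(root).rglob("*.py"):
        if not path.is_file():
            continue
        references = find_hub_references(path.read_text(errors="ignore"))
        if references:
            hits[str(path)] = references
    return hits
```

Each name found this way can then be requested on the hub to see whether it resolves under a different namespace.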

How To Mitigate The Risk?

To avoid the risk, Hugging Face's official advice is to always pin a specific revision when using transformers, which causes the download to fail in the case of AIJacking. If this solution doesn't fit your needs, it's essential to follow these mitigation steps.

The first thing you need to do is map the potentially vulnerable projects you are using. If one of them is suspected to be vulnerable to AIJacking, we strongly advise taking the following actions:

- Constantly update redirected URLs to match the resolved redirect target

- Make sure you follow the Hugging Face security mitigations, such as setting the trust_remote_code variable to False

- Always be alert to changes and modifications in your model registry
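The revision pinning advice and the trust_remote_code setting can be combined in a small loading wrapper. The sketch below is illustrative only: `is_pinned` and `load_pinned` are hypothetical helpers, and the check assumes that "pinned" means a full 40-character commit hash rather than a branch or tag name, since branches and tags can be moved. The `revision` and `trust_remote_code` arguments are existing parameters of `from_pretrained` in transformers.

```python
import re

FULL_COMMIT = re.compile(r"[0-9a-f]{40}")


def is_pinned(revision):
    """True only for a full commit hash; 'main' or a tag name does not count."""
    return isinstance(revision, str) and FULL_COMMIT.fullmatch(revision) is not None


def load_pinned(repo_id, revision):
    """Load a model at an exact commit and refuse to run code shipped with it."""
    if not is_pinned(revision):
        raise ValueError(f"{repo_id}: revision {revision!r} is not a full commit hash")
    # Imported lazily so the check above works without transformers installed.
    from transformers import AutoModel

    return AutoModel.from_pretrained(
        repo_id,
        revision=revision,        # exact commit the project was reviewed against
        trust_remote_code=False,  # do not execute Python code from the repository
    )
```

A call such as `load_pinned("example-org/example-model", "main")` raises `ValueError` before any download is attempted. The repository name here is a placeholder.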

Legit Security’s AI Guard Is Here To Help!

Legit's AI Guard introduces new security controls to enable organizations to build ML applications quickly, ensuring your development teams can integrate models and datasets from Hugging Face without exposing themselves to known risks. Legit's AI Guard identifies and alerts whenever a repository in your organization is vulnerable to AIJacking or different types of supply chain attacks.

Download our new whitepaper.
