Legit Security is the first ASPM platform with advanced capabilities to secure generative AI-based applications and bring visibility, security, and governance into code-generating AI. Millions of developers use AI-based code assistants such as GitHub Copilot or Tabnine, but this rapid adoption has brought a wide range of new risks, which we summarize in this article.

Contribution of Vulnerable Code

Code assistants are created by analyzing millions of publicly available code examples across the web. Given the immense amount of unverified code these large language model assistants have encountered and processed, it's inevitable that they've also learned from buggy, vulnerable code. One study found that nearly 40% of the code GitHub Copilot generated contained vulnerabilities. This means that for applications your business relies on, you need to consider enforcing more controlled or managed usage of code assistants.

Legal and Licensing Issues

AI-generated code suggestions can be lifted from publicly available and open-source software, and failing to identify or attribute the original work can violate open-source licenses. Organizations that use Copilot are therefore exposed to the same risk.

In November 2022, Matthew Butterick and the Joseph Saveri Law Firm filed a class-action lawsuit against GitHub, Microsoft, and OpenAI, alleging that Copilot violates the copyrights of developers whose code was used to train the model. The lawsuit is still ongoing, and the plaintiffs are seeking damages and an injunction against the three companies.

@github copilot, with "public code" blocked, emits large chunks of my copyrighted code, with no attribution, no LGPL license. For example, the simple prompt "sparse matrix transpose, cs_" produces my cs_transpose in CSparse. My code on left, github on right. Not OK. pic.twitter.com/sqpOThi8nf

— Tim Davis (@DocSparse) October 16, 2022

The lawsuit raises important legal questions about the use of AI-powered coding assistants, and it is still too early to say how it will be resolved. While the use of GitHub Copilot is still being debated, if your company uses GitHub Copilot and it is later found that the service violates copyright law or the terms of the open-source licenses that govern the code used to train the model, then your company could be held liable.

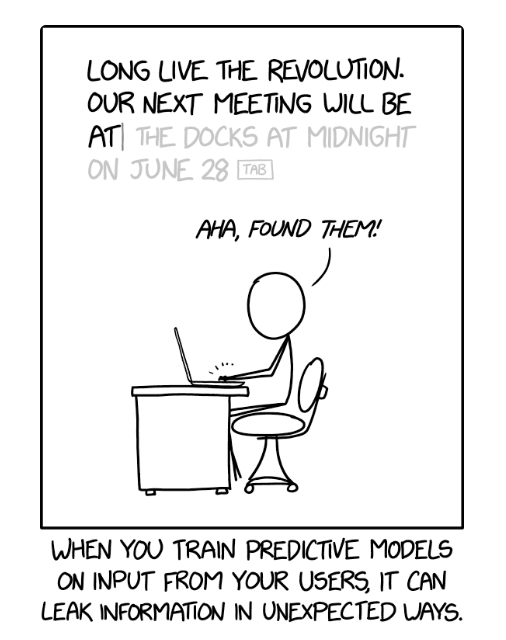

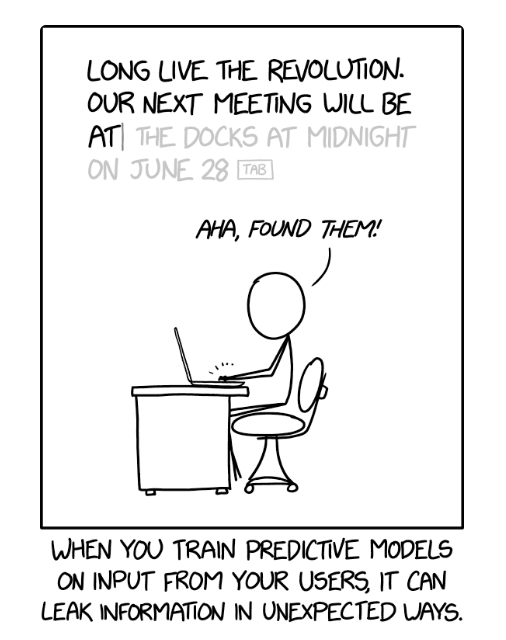

Privacy and Intellectual Property Theft

Using code assistants raises data privacy and protection concerns. Developers’ code might be stored in the cloud, and sensitive data might be compromised if the cloud service is not secured. Leading companies such as Apple and Samsung restrict their employees from using AI code assistants to prevent private information leaks. As we mentioned before, secrets in code are a critical problem. It’s bad enough to accidentally push a secret to an open-source repository, but it's worse when a code assistant uses that secret as part of a code suggestion.

A real example of a leaked API key suggested by Copilot

Even though GitHub has updated its model to prevent it from revealing sensitive information, this protection relies heavily on GitHub’s own secrets-detection mechanism, which could miss specific secret types.
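To illustrate why pattern-based secret detection can miss specific secret types, here is a minimal sketch of a scanner that checks assistant-suggested code before it is accepted. It is not how GitHub or Legit detects secrets; the rule names, the `find_secrets` helper, and the simplified regular expressions are all assumptions made for illustration. Any secret format without a matching rule passes through unnoticed, which is exactly the gap described above.

```python
import re

# Hypothetical, deliberately small rule set (an assumption for illustration).
# Real scanners maintain far larger and more precise rule sets.
SECRET_PATTERNS = {
    # Simplified shape of an AWS access key ID: "AKIA" plus 16 characters.
    "aws_access_key_id": re.compile(r"\bAKIA[0-9A-Z]{16}\b"),
    # Simplified shape of a GitHub personal access token.
    "github_token": re.compile(r"\bghp_[A-Za-z0-9]{36}\b"),
    # Generic "name = 'long literal'" assignment for common secret names.
    "generic_assignment": re.compile(
        r"\b(?:api[_-]?key|secret|token|password)\b\s*[:=]\s*"
        r"['\"][^'\"]{16,}['\"]",
        re.IGNORECASE,
    ),
}


def find_secrets(text):
    """Return (line_number, rule_name) pairs for lines that match a rule."""
    findings = []
    for line_no, line in enumerate(text.splitlines(), start=1):
        for name, pattern in SECRET_PATTERNS.items():
            if pattern.search(line):
                findings.append((line_no, name))
    return findings


if __name__ == "__main__":
    # Placeholder values only; none of these are real credentials.
    suggestion = (
        'key_id = "AKIAIOSFODNN7EXAMPLE"\n'
        'print("hello")\n'
        'api_key = "not-a-real-key-0123456789"\n'
    )
    for line_no, rule in find_secrets(suggestion):
        print(f"line {line_no}: possible secret ({rule})")
```

A check like this could run on a suggestion before it is committed, but it only catches what its rules describe, so it complements rather than replaces broader scanning.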

Legit Security Can Govern and Protect Against AI Code Generation

Legit has developed features for code generation detection and provides robust insight into repositories accessible to users of code generation tools such as GitHub Copilot. Organizations can now quickly identify which repositories have been influenced by automatic AI code generation, providing a deeper understanding of what code in their code base is affected by AI.

In addition, we've improved incident tracking by highlighting when users install code generation tools. These capabilities are designed to provide more transparency and accountability, giving organizations more control over their code-generation processes. Ready to learn more? Schedule a product demo or check out the Legit Security Platform.
